A deep learning model with machine vision system for recognizing type of the food during the food consumption

Data collection

Collecting food product samples is essential for creating effective management systems that impact health and the environment. To address obesity, accurate data and intelligent systems are needed to ensure adequate food supply, reflecting the diverse cultural practices of different civilizations17,18.

In this study, a diverse data set was constructed featuring popular foods from across Iran, which is known for its rich culinary culture. Selecting specific food items from 32 provinces was challenging because of the wide range of options available. The chosen foods reflect public interest and opinion, cultural significance, prevalence in restaurants, hospitals, and university canteens, and the advice of nutritionists. A total of 32 types of food were prepared, representing ingredients and preparations from all the provinces. Because recipes can vary significantly, samples of each dish were collected from different sources, including home cooks, restaurants, and canteens, to account for regional variation. Ultimately, 16 primary food classes were selected, and an additional 16 products were included to enhance the data set’s potential for training a model capable of recognizing food during consumption. After this selection process, images and videos of the prepared dishes were captured under diverse conditions, including varying angles, backgrounds, and lighting. This data set, reflecting different recipes and settings, was designed to train deep learning algorithms effectively and to support real-time food recognition in practical applications. Figure 2 presents several examples of the selected food products, extracted from the videos. They show the variety of backgrounds, illumination, angles, and distances used in this investigation to capture the morphology of the foods.

Source: Created by the authors.

Sample images from the collected data set.

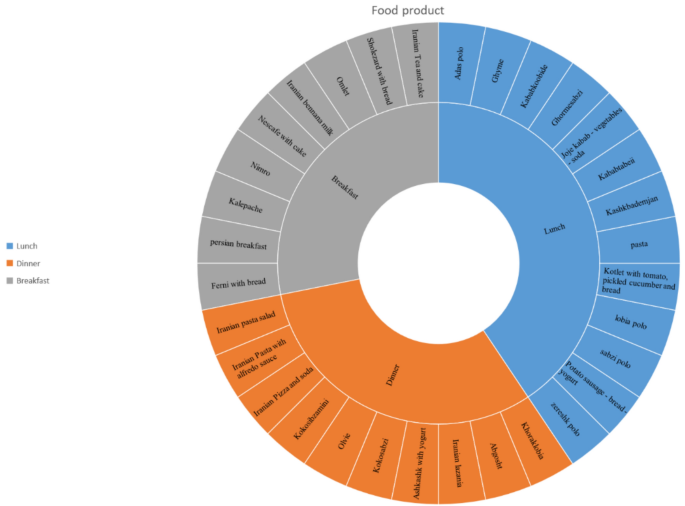

Food products were divided into three main meals: breakfast, lunch, and dinner. Each meal contained several food classes, and during imaging each class was, where possible, represented by one main dish along with a drink or appetizer. Figure 3 shows the three main meals and the 32 selected classes, along with the names of the food products.

Meal statistics. The inner circle shows the three main meal categories; the outer circle shows the food classes within each category.

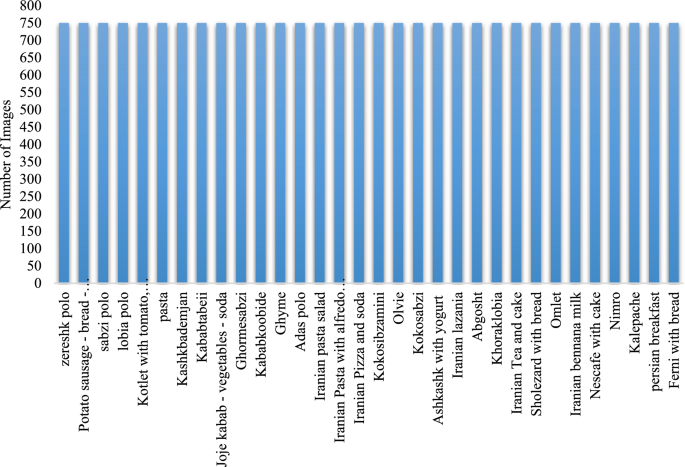

To detect consumed food products correctly, images of the food products were first taken at the time of consumption. To make the system easier to analyze and use, videos of each product were then recorded under different conditions during consumption. First, 16 classes of food were selected and several videos were prepared. Frames were extracted from the videos at half-second intervals using the Python programming language. For the 16 classes, 12,000 images of 1280 × 680 pixels were obtained, covering consumption from beginning to end. To enlarge the database, more videos were prepared, yielding 24,000 images of 1280 × 680 pixels for 32 classes of consumed foods. Figure 4 shows the number of images obtained for each food class as a bar chart.

The number of images from each category.
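The half-second frame sampling described above can be sketched as follows. This is a minimal illustration, not the authors' script: the text states only that Python was used, so the function name `sample_frame_indices` and its parameters are hypothetical. It computes which frame indices to keep for a given frame rate; the frames themselves would then be read and saved with a video library of choice.

```python
def sample_frame_indices(total_frames, fps, interval_s=0.5):
    """Return the indices of frames to keep when sampling every `interval_s` seconds.

    Hypothetical helper: the source does not name the extraction code.
    """
    if fps <= 0 or interval_s <= 0:
        raise ValueError("fps and interval_s must be positive")
    indices = []
    k = 0
    while True:
        # Frame closest to the k-th sampling instant.
        idx = round(k * interval_s * fps)
        if idx >= total_frames:
            break
        # At very low frame rates two instants can map to the same frame;
        # keep each frame only once.
        if not indices or idx != indices[-1]:
            indices.append(idx)
        k += 1
    return indices


# Example: a 3-second clip recorded at 30 frames per second.
kept = sample_frame_indices(total_frames=90, fps=30)
print(kept)
```

At 30 frames per second this keeps every 15th frame, so a clip contributes two images per second of footage.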

Imaging system

The imaging system designed for identifying consumed food products comprises a camera mounted on a monopod, along with two belts for secure attachment. A mobile phone with an appropriate camera is connected to the monopod, which is affixed to the individual performing the sampling operation. The monopod is positioned on the left side of the person’s body, aligning the mobile phone camera nearly parallel to the shoulder. The camera angle is set at approximately 160° relative to the person. This angle may vary as the individual consumes food, resulting in body movements that create new angles with each frame of the video. To accommodate different environments and lighting conditions, various light sources are utilized, including moonlight and LED lamps. Figure 5 illustrates the arrangement of the cameras and associated equipment.

The placement of cameras and equipment for imaging food products.

Camera

The food identification system used several camera models for sampling, including the Samsung S23 and S23 Ultra and the Huawei P50, P40, P30, and P30 Lite. Each offers different video and image quality. In addition, multiple Canon and Nikon video and still cameras with 4K resolution, capturing at 10 and 6.5 frames per second, were used to provide a diverse range of devices.

Computer and processing

Videos and images captured with the imaging system were transferred from the mobile phones and cameras, via a USB 3 port, to laptops with the following specifications: CPU: AMD Ryzen 5 3500U; GPU: AMD Radeon Vega 8 Graphics; RAM: 8 GB; storage: 128 GB SSD. In this machine vision system, the computer served as the primary platform for image analysis and processing, running programs within the limits of its processing power. Data preprocessing involved steps such as removing missing data and enhancing quality. Once the data were prepared, they were uploaded to Google Drive, enabling access via Google Cloud for data set analysis. Python version 3.12 and Google Cloud were used to implement the deep learning algorithm. Because the deep learning algorithms require substantial processing power for the large volume of data generated, they relied on additional compute from Google Colab.

Data augmentation

Data augmentation is a technique for enriching the data available to deep learning algorithms by changing the size, intensity, color, and lighting of images. By expanding the diversity of the training data set relative to the original data, it is especially useful for improving the efficiency of deep learning algorithms, preventing overfitting, and increasing the generalization ability of models19. To enhance object recognition, five steps were applied. First, the image was rotated by 10 to 15 degrees. Next, the food was translated left and right while keeping the object intact. Then, the image was sheared to produce a new viewing angle. After that, the image was zoomed in or out to train the model on different scales. Finally, contrast and brightness were adjusted to simulate varying lighting conditions. These five augmentation steps increased the 16-class data set from 12,000 to 60,000 images and the 32-class data set from 24,000 to 120,000 images, a fivefold increase in both cases.
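The five augmentation steps can be expressed compactly as one geometric transform plus one intensity transform. The sketch below is illustrative only. The 10 to 15 degree rotation range comes from the text, while every other range, the function names, and the way the steps are composed are assumptions made here. It builds a 2 × 3 affine matrix (zoom, rotation, shear, horizontal shift) and a per-pixel contrast and brightness adjustment; an image library would apply both to real images.

```python
import math
import random


def sample_augmentation(rng):
    """Draw one random setting for each augmentation step."""
    return {
        "angle_deg": rng.uniform(10, 15),     # range stated in the text
        "shift_x_px": rng.uniform(-20, 20),   # assumed range
        "shear_deg": rng.uniform(-10, 10),    # assumed range
        "zoom": rng.uniform(0.8, 1.2),        # assumed range
        "contrast": rng.uniform(0.8, 1.2),    # assumed range
        "brightness": rng.uniform(-30, 30),   # assumed offset on a 0-255 scale
    }


def affine_matrix(angle_deg, shear_deg, zoom, shift_x_px):
    """Compose zoom * rotation * shear, plus a horizontal shift, as a 2x3 matrix."""
    a = math.radians(angle_deg)
    s = math.tan(math.radians(shear_deg))
    cos_a, sin_a = math.cos(a), math.sin(a)
    # Rotation [[cos, -sin], [sin, cos]] multiplied by shear [[1, s], [0, 1]].
    return [
        [zoom * cos_a, zoom * (cos_a * s - sin_a), shift_x_px],
        [zoom * sin_a, zoom * (sin_a * s + cos_a), 0.0],
    ]


def adjust_pixel(value, contrast, brightness):
    """Scale contrast around mid-gray, add a brightness offset, clamp to 0-255."""
    out = (value - 128) * contrast + 128 + brightness
    return max(0, min(255, round(out)))


params = sample_augmentation(random.Random(0))
matrix = affine_matrix(params["angle_deg"], params["shear_deg"],
                       params["zoom"], params["shift_x_px"])
```

Drawing the parameters from a seeded `random.Random` keeps an augmentation run reproducible, which helps when comparing architectures on the same augmented set.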

Deep learning architecture

Brief introduction of convolutional neural networks (CNNs)

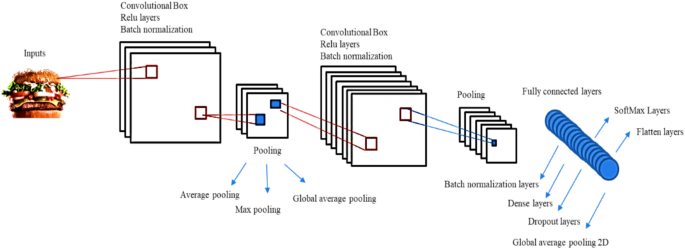

Convolutional neural networks (CNNs) represent a key advancement in deep learning and are primarily used for image classification20,21. They consist of convolutional, pooling, and fully connected layers; the processing details are shown in Fig. 6. The architecture typically preprocesses input images through resizing and quality enhancement before applying convolution with ReLU activation and pooling layers. CNN training includes two stages: feedforward and backpropagation22. In the feedforward stage, each image undergoes point-wise multiplication with the neuron parameters, followed by the convolution operations. The network’s output is then compared with the correct answer to calculate the error, which is propagated backward to adjust the parameters. This iterative process continues until training is complete23.

Source: Created by the authors.

The structure of the CNN used for feature extraction and recognition of specific objects.
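The convolution, ReLU, and pooling operations named in this subsection can be illustrated on a tiny example. The following is a minimal pure-Python sketch of a single feedforward pass through one convolution layer, not the architecture used in the study; the 5 × 5 input and the 2 × 2 kernel are arbitrary illustrative values.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Replace negative activations with zero."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling; rows or columns that do not fill a window are dropped."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


image = [
    [1, 2, 0, 1, 3],
    [0, 1, 2, 3, 1],
    [1, 0, 1, 2, 2],
    [2, 1, 0, 1, 0],
    [0, 2, 1, 0, 1],
]
kernel = [[1, 0], [0, -1]]  # illustrative diagonal-difference filter

features = max_pool(relu(conv2d(image, kernel)))
print(features)
```

In a trained network the kernel values are the parameters that backpropagation adjusts; here they are fixed by hand so that each step can be checked on paper.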

Hyperparameters

Hyperparameters in deep learning algorithms, such as batch size, optimizer (for example, Adam or Lion), and learning rate, significantly affect the model’s accuracy and loss. Adjusting them can improve performance by speeding convergence and increasing precision in recognition systems. The learning rate is an important hyperparameter in training deep neural networks, and its value directly affects underfitting and overfitting. Optimizers are another important hyperparameter: they adjust the network weights to minimize loss and improve accuracy. They help prevent overfitting, improve predictions on unseen data, and increase training speed. Various optimizers were used in this investigation24,25. Image size is also a crucial parameter when preprocessing data for deep learning algorithms; it strongly affects training efficiency, model performance, hardware constraints, and architecture requirements26. Finally, batch size refers to the number of images processed together by the generator or model. It helps manage large data sets by reducing GPU and CPU usage. Overall, memory usage and training time depend on both batch size and image size27,28.
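The interaction between batch size and image size can be made concrete with simple arithmetic. The sketch below is a rough estimate under stated assumptions: the batch size of 32, the 224 × 224 input size, and the 32-bit float storage are illustrative choices, not values reported in the text, and the memory figure covers only the input batch, not weights or activations. The 120,000-image total is the augmented 32-class data set; using all of it for one epoch is also an assumption, since no train and validation split is given here.

```python
import math


def steps_per_epoch(num_images, batch_size):
    """Number of batches needed to see every image once."""
    return math.ceil(num_images / batch_size)


def batch_input_bytes(batch_size, height, width, channels=3, bytes_per_value=4):
    """Memory for one batch of input tensors only (float32 by default)."""
    return batch_size * height * width * channels * bytes_per_value


# Assumed settings for illustration.
steps = steps_per_epoch(120_000, batch_size=32)
resized = batch_input_bytes(32, 224, 224)
full_res = batch_input_bytes(32, 680, 1280)

print(steps)
print(f"{resized / 1e6:.1f} MB per batch at 224 x 224")
print(f"{full_res / 1e6:.1f} MB per batch at 1280 x 680")
```

Doubling the batch size halves the number of steps per epoch but doubles the input memory per step, which is why the two parameters have to be tuned together against the available hardware.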

Implementing deep learning algorithms

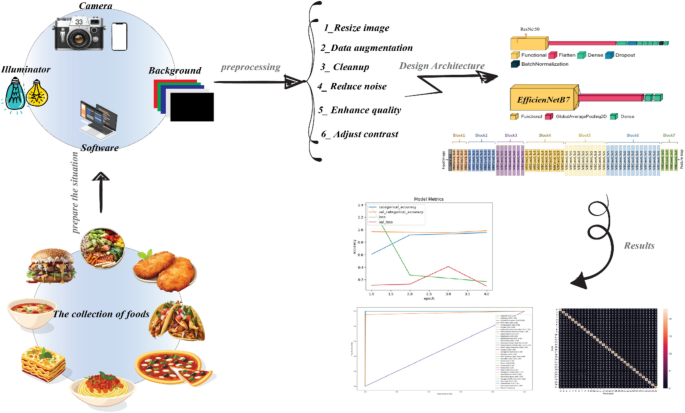

After the data set was prepared and transferred to the models, the deep learning architectures were adjusted to the complexity and volume of the data. Given the image capture conditions and the processing setup (a laptop for preprocessing and Google Colab for food analysis), the best architecture was refined to achieve peak accuracy, as shown in Fig. 7. The deep learning architectures were implemented in three phases. First, nine popular pretrained deep learning architectures were trained on the data set in order to select the best one. In this step, each architecture was trained first on the 16 food classes and then on the 32 classes. Details of the architectures are given in Table 1. Next, the model entered a fine-tuning phase. This step is intended to reduce computing time, but it did not show reliable performance on this complex, large, and novel data set. Finally, the hyperparameters of the best architecture were adjusted to increase accuracy and performance while reducing the model’s training and response times.

Source: Created by the authors.

The route of preprocessing and recognition.
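The final phase, adjusting the hyperparameters of the selected architecture, can be organized as an exhaustive search over a small grid. The sketch below is one possible way to structure that search, not the procedure the authors report. The `grid_search` helper is hypothetical, the grid values are placeholders, and `evaluate` stands for a caller-supplied function that would train the model with a given configuration and return a score such as validation accuracy.

```python
from itertools import product


def grid_search(grid, evaluate):
    """Try every combination in `grid` and return (best_config, best_score).

    `evaluate` is supplied by the caller: it receives one configuration
    dict and returns a number where higher is better.
    """
    names = list(grid)
    best_config, best_score = None, float("-inf")
    for values in product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


# Placeholder grid: the optimizer names appear in the text, the numeric
# values are assumptions chosen only to show the structure.
grid = {
    "optimizer": ["adam", "lion"],
    "learning_rate": [1e-2, 1e-3, 1e-4],
    "batch_size": [16, 32, 64],
    "image_size": [128, 224],
}
```

This grid has 2 × 3 × 3 × 2 = 36 combinations, each requiring a full training run, so in practice the score could also penalize training and response time to reflect the trade-off described above.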

Ethics approval

All methods in this study were carried out in strict accordance with relevant ethical guidelines and regulatory frameworks to ensure the integrity and ethical soundness of the research. The experimental protocols were reviewed and approved by the Ethics Committee of the University of Tehran (approval number: 124/178439), adhering to the principles outlined in the Declaration of Helsinki and institutional ethical policies.

Participant selection and consent

Participants were selected from a diverse range of age groups and genders (16–60 years old), considering the variability in food consumption behaviors in front of a camera. This age range allowed for a comprehensive analysis of different consumption speeds, angles, and interactions with various food textures, ensuring a more robust dataset for model training. Prior to their participation, informed consent was obtained from all individuals, and in cases where participants were minors, consent was secured from their legal guardians. Participants were fully briefed on the study’s objectives, procedures, and their right to withdraw at any stage without any repercussions.

Data privacy and confidentiality

To uphold strict data privacy and confidentiality standards, all collected data were fully anonymized, and no personally identifiable information was stored. Video recordings and images were securely encrypted and stored on password-protected institutional servers, with access restricted to authorized research personnel only. Data collection and storage adhered to General Data Protection Regulation (GDPR) standards and institutional ethical policies. Furthermore, upon completion of the study, all personal data were de-identified and retained only in aggregate form to ensure privacy protection while maintaining the integrity of the research.
